Hi! I'm Lala, an architect and product designer based in Toronto. UX Anthropologist is an unfiltered record of design discourse in UX, architecture, and AI — dissecting the cultural and human dimensions of data in the era of AI.

“Are you for or against AI?” A question I’ve seen often on Substack.

Yes, I’m all for using AI responsibly to do productive work, but

No, I’m against a dystopian future where robots take over the world…?

But does that mean I shouldn’t use AI or support it to become more “intelligent”?

And, what does “intelligence” even mean?

So, really, I think the question is: What is AI, really?

The Problem: the AI Buzzword

AI is no longer just a technical term used among tech workers; it has become a buzzword that companies use to attract funding.

Although AI has been around for decades, with the term “Artificial Intelligence” first coined back in 1956, the actual “thing” itself has only gained popularity in the past three to five years.

Many people, myself included, first heard about AI’s shocking capabilities through the groundbreaking news of ChatGPT’s release.

“It thinks and responds to you like a human!”

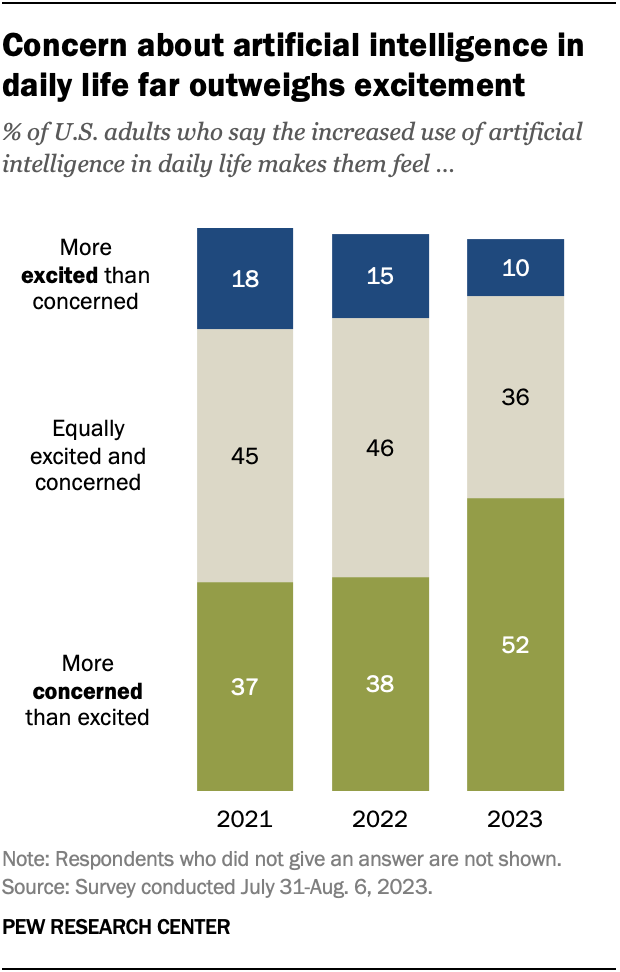

Not knowing what tokens, embeddings, and attention mechanisms were, I was pretty freaked out. And I was not alone.

We didn’t all learn AI at school like we learned math or language. People who really understand AI today would’ve learned about it through YouTube videos (by Andrej Karpathy, for example), podcasts (like Lenny’s Podcast, of course!), or newsletters, the list goes on… Or even simply by using AI products and features consistently!

While these probably seem like the baseline to you, not everyone wants to spend the time on them, and worse, many people don’t know where AI is present in their day-to-day lives. Hence, consciously using AI is key.

Companies that scream “AI is the future, and so are we, stakeholders!” while poorly incorporating more AI functions that no one asked for are not exactly helping.

Then, without being pro-/anti-AI, what is AI?

Note: this discourse has no intention of helping AI “clear its name”, but will provide a relatively objective view of what AI is in non-technical terms, and what we can do about it and with it.

Artificial, Collective Intelligence?

Generally, this is the response you’d get if you asked Google/Perplexity/Claude 👇

Artificial intelligence (AI) refers to the development of computer systems that can perform tasks typically requiring human intelligence, including learning, reasoning, problem-solving, perception, language understanding, and decision-making.

For many people, and once for me too, this definition made the futures shown in movies like I, Robot or games like Detroit: Become Human seem believable.

At the same time, the rapid advancement of LLMs makes these popular fictions seem true, with their human-like responses and the occasional news of “AI acting on its own!”. But Artificial Intelligence ≠ Human Intelligence.

(Etymology becomes a crucial aspect here!)

Sari Azout, founder of Sublime, offers a different term for AI:

“Collective Intelligence”

She explains that AI is basically a massive collection of lessons learned by humans, which makes it essentially the collective intelligence of humanity.

See her full speech at Sana AI Summit 2025.

Essentially,

“Artificial Intelligence” only describes the surface of AI, not its core.

So, if we were to complete the definition of AI:

Artificial intelligence (AI) refers to the development of computer systems that can perform tasks typically requiring human intelligence, including learning, reasoning, problem-solving, perception, language understanding, and decision-making…

AI is a product of human engineering, constructed from algorithms, data, and computational hardware that are sourced from and built by humans, and designed to mimic human functioning.

Rebuttal: “How do you know AI doesn’t have consciousness?”

Geoffrey Hinton, the “Godfather of AI”, says

“We don’t know!”

Hinton is one of the well-known leaders who hold a pessimistic view of the future of AI. While he warns about

the threat of AI surpassing human intelligence (which, technically, it already does, in that it can process information at a much faster speed and on a much grander scale), and

the incoming seismic shifts in the job market (of which we are all aware),

he remains agnostic on whether AI could gain consciousness.

Ultimately, his true concern lies in the bad (human) actors who would use AI unethically, such as using it as a military weapon.

So, his message is actually:

“Humanity + AI” threatens the future of humanity, not AI alone.

What we can do is use this tool consciously: know where you are using AI, what benefits it brings you, what must be regulated, and what is irreplaceably human.

These are challenging questions that require long-term discussions, but the least we can do is recognize where we use AI on the daily.

AI Applications that I Bet You Use

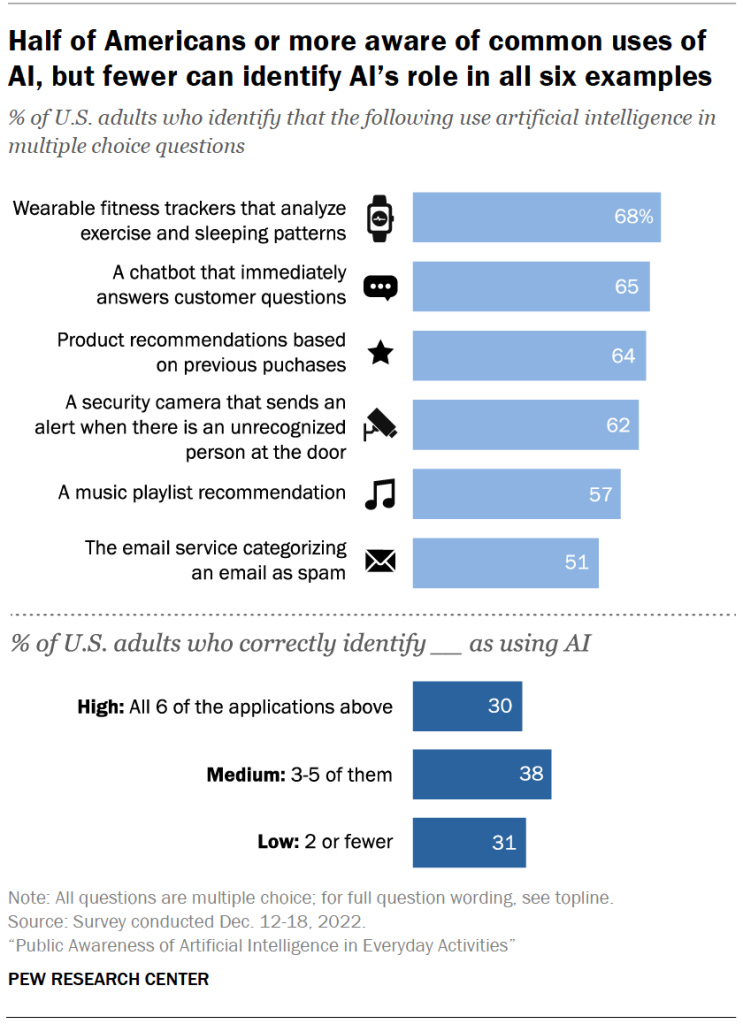

Common misconception: AI = LLM.

LLMs are like the “face” of AI.

While LLM-based products like ChatGPT are among the most common general-purpose AI applications, the term “AI” actually encompasses much more than this.

There are two main categories in AI:

General AI: Can understand, learn, and apply intelligence across a broad range of tasks — Plot twist: true AGI (Artificial General Intelligence) doesn’t exist yet!

Narrow AI: Specialized for specific tasks. Most real-world AI today fits this category! It shows up in features like:

Facial & voice recognition

Recommendation algorithms on Instagram, TikTok, Spotify, Netflix, HBO, etc.

Email spam filters

Any search engine

Any navigation app

Chatbots (yep, they are not AGI!)

Don’t worry if you didn’t know these features and products are AI… because you’re not alone! At least not in the States, as it is the only country that conducts studies like these at the moment.

My point is, AI has been in our lives for decades now, and it is not as scary as it is often imagined to be. The risks of AI are as real as the fact that we have all been using it for years.

To me, the most important part is for everyone to recognize where and how we use AI. Only then can we mitigate the risks, leverage the benefits, and navigate the future of this delicate yet incredibly powerful tool.

See you at the next discourse, “UX Designers’ New Role: AI Broker” on my prediction of UX Designers’ future role in the era of AI!
